ANDROID MALWARE DETECTION USING STATIC AND DYNAMIC TECHNIQUES

2025-12-22 08:27:03

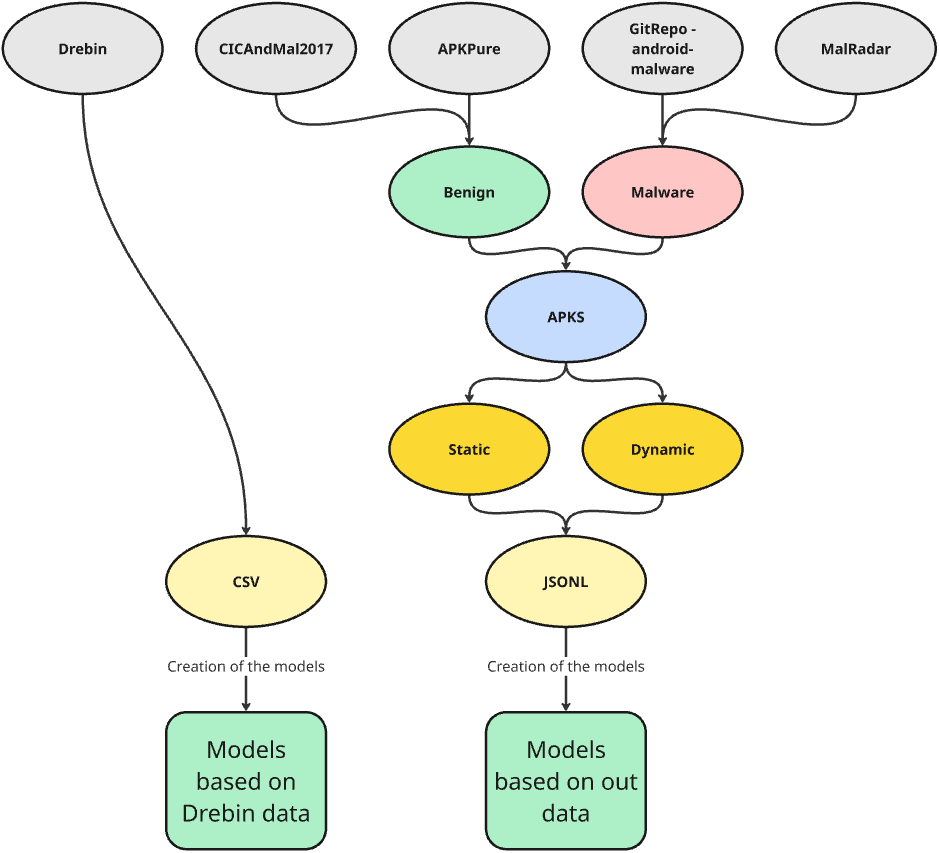

This school project focused on detecting Android malware by analyzing both how apps are built and how they behave at runtime. Instead of relying on very large feature sets, the goal was to investigate whether a smaller, more meaningful set of features could still provide reliable and interpretable malware detection using machine learning.

Limitations: Dataset Size, Bias, and App Age

A significant limitation of this project was the relatively small number of applications available for dynamic analysis. Running apps in emulated environments is time-consuming and resource-intensive, which restricted the size of the dataset. As a result, the findings may be biased toward the specific applications that were analyzed and may not fully represent the broader Android ecosystem.

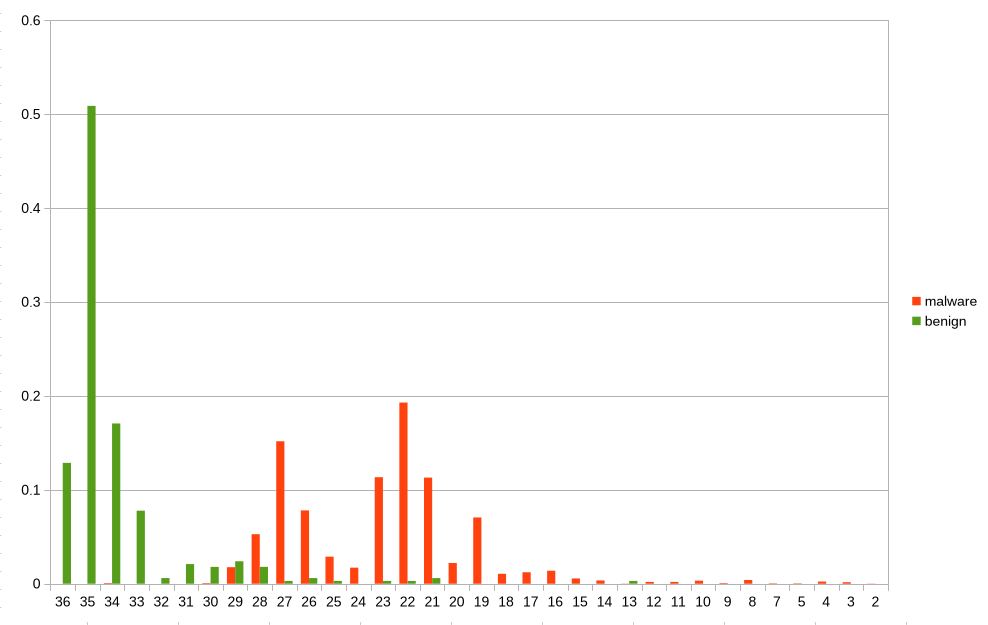

Another important challenge was the difference in collection dates between datasets. Many malicious and benign applications were collected years apart, so some apps were built for older Android versions. This introduces bias, because older applications often request different permissions, use deprecated APIs, or exhibit behaviors that are no longer common in modern apps.

The image below shows the distribution of benign and malicious applications by their targeted SDK version.

Additionally, some applications were simply outdated. Malware techniques, Android security mechanisms, and developer practices evolve rapidly, which means results obtained from older samples may not fully reflect current real-world threats. These factors limit the generalizability of the results and highlight the difficulty of conducting up-to-date Android malware research.
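One simple way to make this app-age bias visible is to tabulate the `targetSdkVersion` of each class before training. The sketch below is illustrative only: it assumes each sample has already been reduced to a `(label, target_sdk)` pair, the function names are hypothetical, and the toy data is not the project's dataset.

```python
from collections import Counter, defaultdict

def sdk_distribution(samples):
    """Count how many apps of each label target each SDK level.

    samples: iterable of (label, target_sdk) pairs, e.g. ("malicious", 19).
    Returns {label: Counter({sdk_level: count, ...}), ...}.
    """
    dist = defaultdict(Counter)
    for label, sdk in samples:
        dist[label][sdk] += 1
    return dict(dist)

def median_sdk(counter):
    """Median target SDK level for one label (lower median for even counts)."""
    levels = sorted(counter.elements())
    return levels[(len(levels) - 1) // 2]

if __name__ == "__main__":
    # Toy data for illustration only.
    toy = [("malicious", 19), ("malicious", 21), ("malicious", 19),
           ("benign", 30), ("benign", 33), ("benign", 29)]
    for label, counter in sdk_distribution(toy).items():
        print(label, dict(sorted(counter.items())), "median:", median_sdk(counter))
```

A large gap between the per-class medians is a warning sign that a classifier could learn "old SDK" rather than "malicious".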

Static Analysis: What the App Looks Like

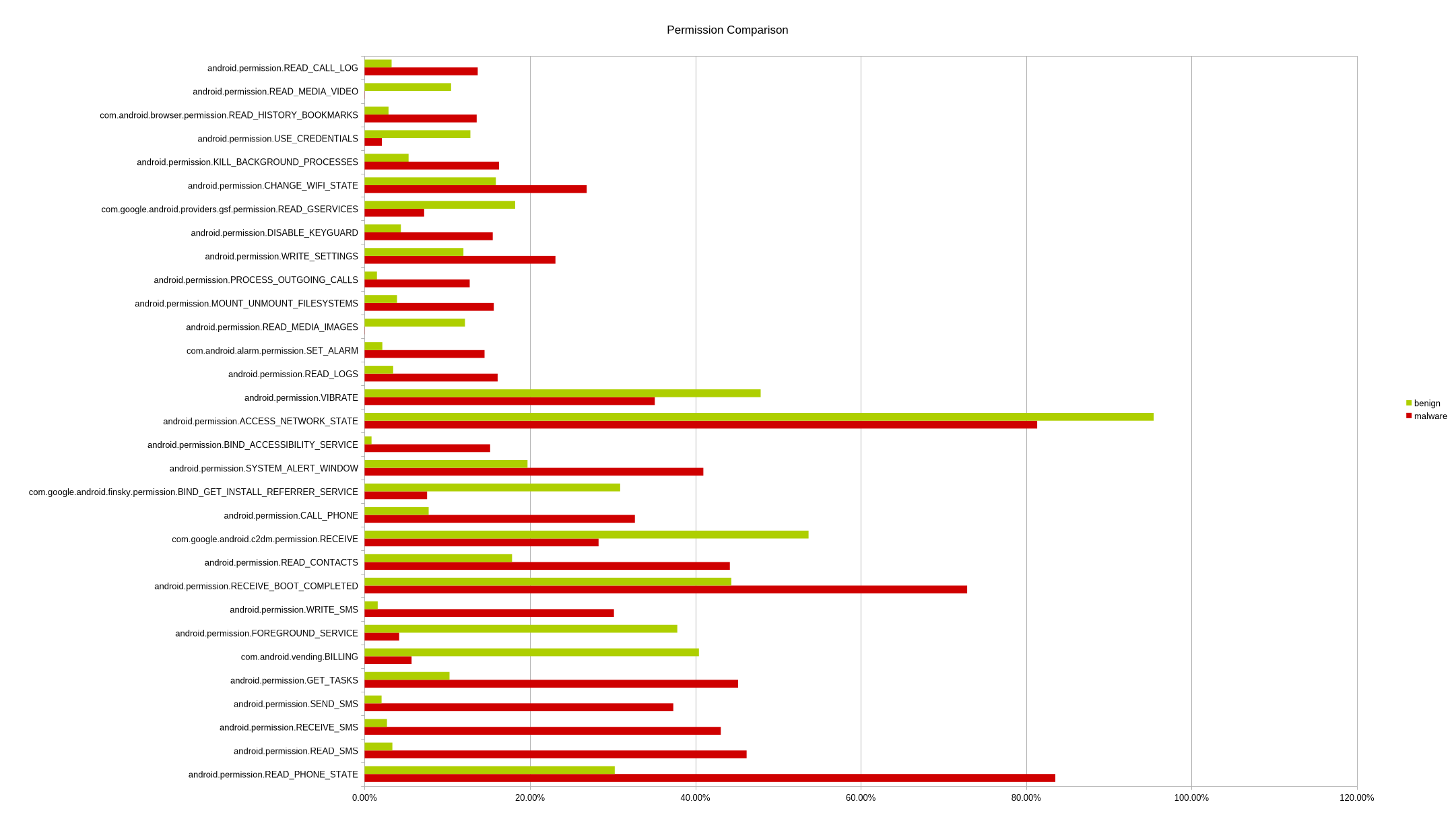

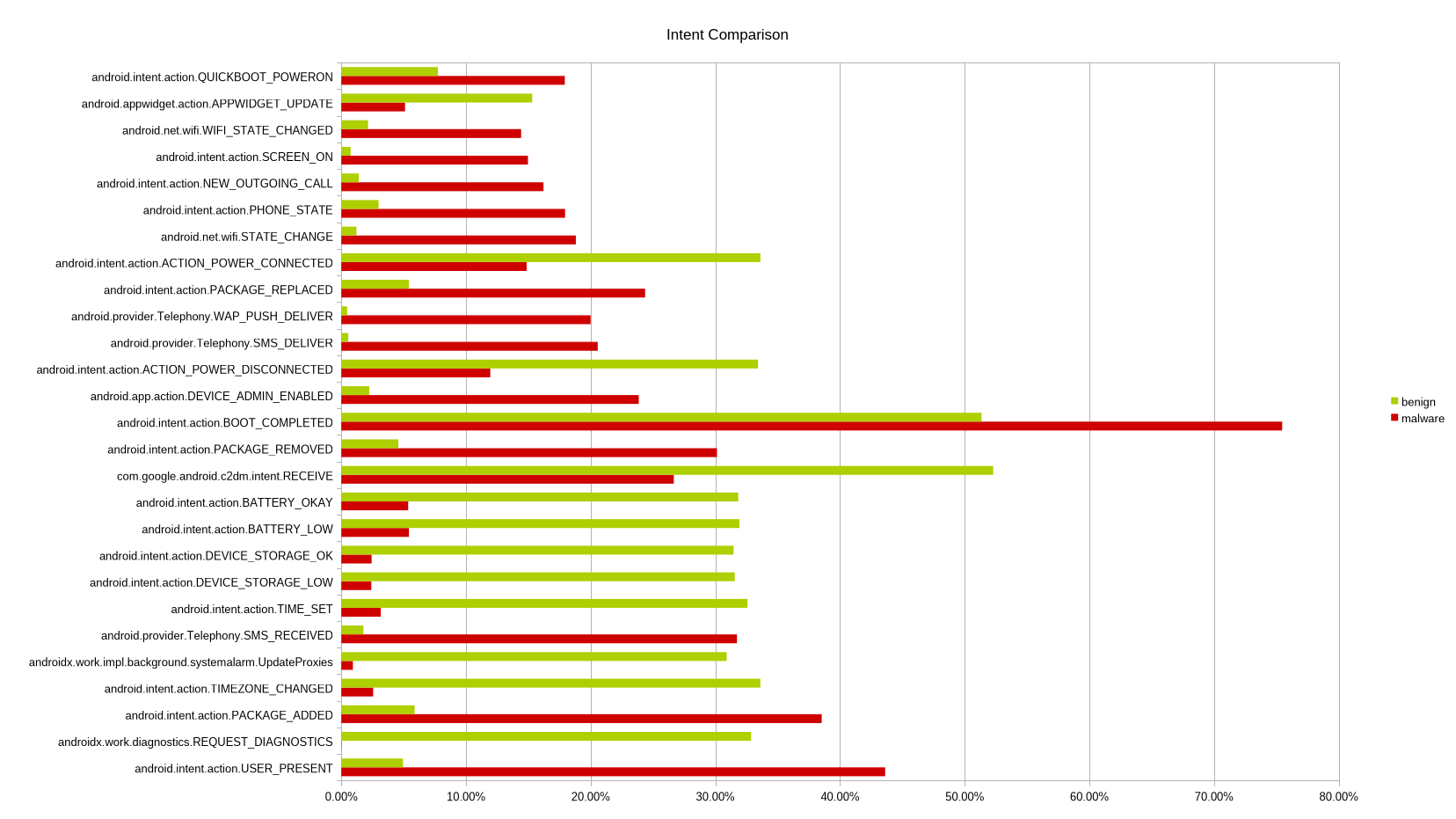

Static analysis examines an APK without running it. In this project, static features were extracted by unpacking APK files and analyzing elements such as permissions, intents, services, and application metadata. These features are easy to collect and scale well, which makes static analysis suitable for scanning large numbers of apps quickly.
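A minimal sketch of this kind of manifest-based extraction is shown below. It assumes the binary `AndroidManifest.xml` inside the APK has already been decoded to plain XML (for instance with apktool); the function names, the feature dictionary layout and the sample manifest are hypothetical and not taken from the project.

```python
import xml.etree.ElementTree as ET

# In a decoded manifest, attribute names live in the Android XML namespace.
ANDROID_NS = "{http://schemas.android.com/apk/res/android}"

def extract_static_features(manifest_xml: str) -> dict:
    """Collect permissions, intent-filter actions, services and the target SDK
    from a decoded (plain-text) AndroidManifest.xml."""
    root = ET.fromstring(manifest_xml)
    name = ANDROID_NS + "name"

    def names(tag):
        # Unique android:name values of every element with this tag.
        return sorted({el.get(name) for el in root.iter(tag) if el.get(name)})

    sdk = root.find("uses-sdk")
    target = sdk.get(ANDROID_NS + "targetSdkVersion") if sdk is not None else None
    return {
        "package": root.get("package"),
        "permissions": names("uses-permission"),
        "intent_actions": names("action"),
        "services": names("service"),
        "target_sdk": int(target) if target else None,
    }

def to_binary_vector(features: dict, vocabulary: list) -> list:
    """Encode permissions and intent actions as 0/1 against a fixed vocabulary."""
    present = set(features["permissions"]) | set(features["intent_actions"])
    return [1 if item in present else 0 for item in vocabulary]

# Hypothetical decoded manifest used for illustration.
SAMPLE = """<manifest xmlns:android="http://schemas.android.com/apk/res/android" package="com.example.demo">
  <uses-sdk android:minSdkVersion="19" android:targetSdkVersion="28"/>
  <uses-permission android:name="android.permission.SEND_SMS"/>
  <uses-permission android:name="android.permission.INTERNET"/>
  <application>
    <service android:name=".SyncService"/>
    <receiver android:name=".BootReceiver">
      <intent-filter>
        <action android:name="android.intent.action.BOOT_COMPLETED"/>
      </intent-filter>
    </receiver>
  </application>
</manifest>"""

if __name__ == "__main__":
    feats = extract_static_features(SAMPLE)
    print(feats)
    print(to_binary_vector(feats, ["android.permission.SEND_SMS",
                                   "android.permission.READ_CONTACTS",
                                   "android.intent.action.BOOT_COMPLETED"]))
```

Encoding every app against the same fixed vocabulary produces equal-length 0/1 vectors, which is the form most classifiers expect.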

Static analysis alone produced very strong results, especially when using carefully selected features such as permissions and intents. However, static methods have known weaknesses. They struggle with code obfuscation, encrypted payloads, and dynamic code loading, which are common techniques used by modern Android malware.

Dynamic Analysis: What the App Does

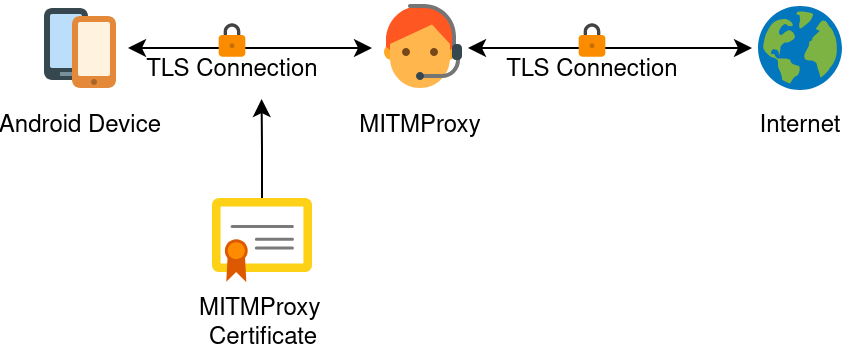

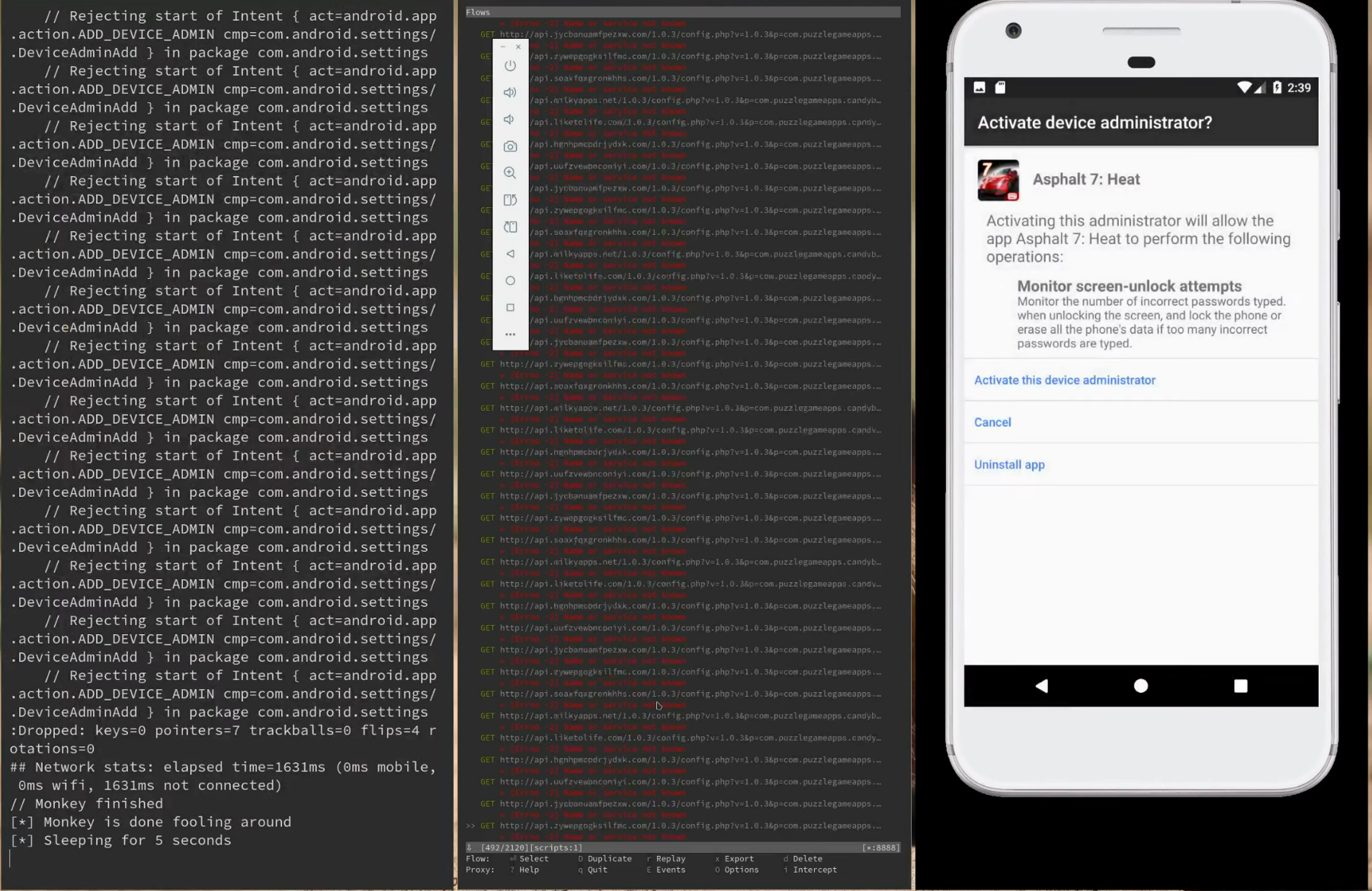

To address the limitations of static analysis, the project also implemented dynamic analysis. This involved running apps inside Android Virtual Devices (AVDs) and observing their behavior at runtime. Tools such as Monkey were used to simulate user interaction, while mitmproxy intercepted network traffic to capture communication patterns.
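The Monkey step can be scripted from the host through `adb`. The sketch below only builds and runs the `adb shell monkey` command for one package; the function names, the default event count, seed and throttle are illustrative assumptions rather than the project's settings, and the mitmproxy side of the setup is not covered here.

```python
import shlex
import subprocess

def build_monkey_command(package, events=500, seed=42, throttle_ms=100, serial=None):
    """Build an adb command that sends pseudo-random UI events to one app."""
    cmd = ["adb"]
    if serial:  # target a specific AVD, e.g. "emulator-5554"
        cmd += ["-s", serial]
    cmd += ["shell", "monkey",
            "-p", package,                   # restrict events to the app under test
            "-s", str(seed),                 # fixed seed gives a reproducible event sequence
            "--throttle", str(throttle_ms),  # delay between events, in milliseconds
            "-v",                            # verbose output
            str(events)]                     # total number of events to inject
    return cmd

def run_monkey(package, timeout_s=600, **kwargs):
    """Run Monkey against a running emulator and return the CompletedProcess."""
    cmd = build_monkey_command(package, **kwargs)
    return subprocess.run(cmd, capture_output=True, text=True, timeout=timeout_s)

if __name__ == "__main__":
    # Print the command instead of running it, so no emulator is needed here.
    print(shlex.join(build_monkey_command("com.example.demo", serial="emulator-5554")))
```

Fixing the seed matters for comparison: every app receives the same sequence of injected events, so behavioral differences are less likely to come from the interaction itself.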

Dynamic features included network behavior, file activity, and runtime characteristics that cannot be seen in static code. These features provide stronger evidence of malicious intent, especially for apps that hide their behavior until execution.

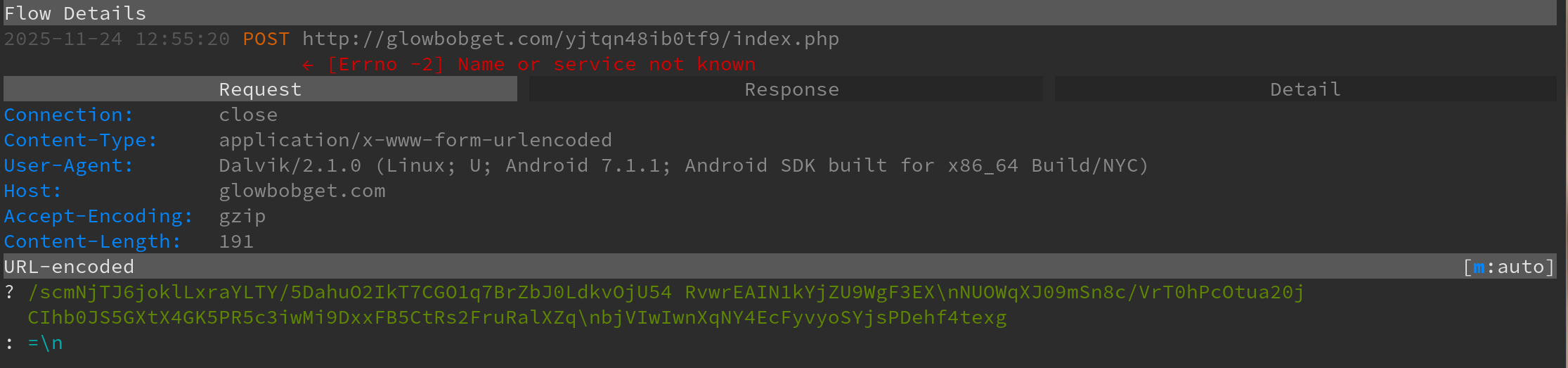

Entropy-Based Detection in Dynamic Analysis

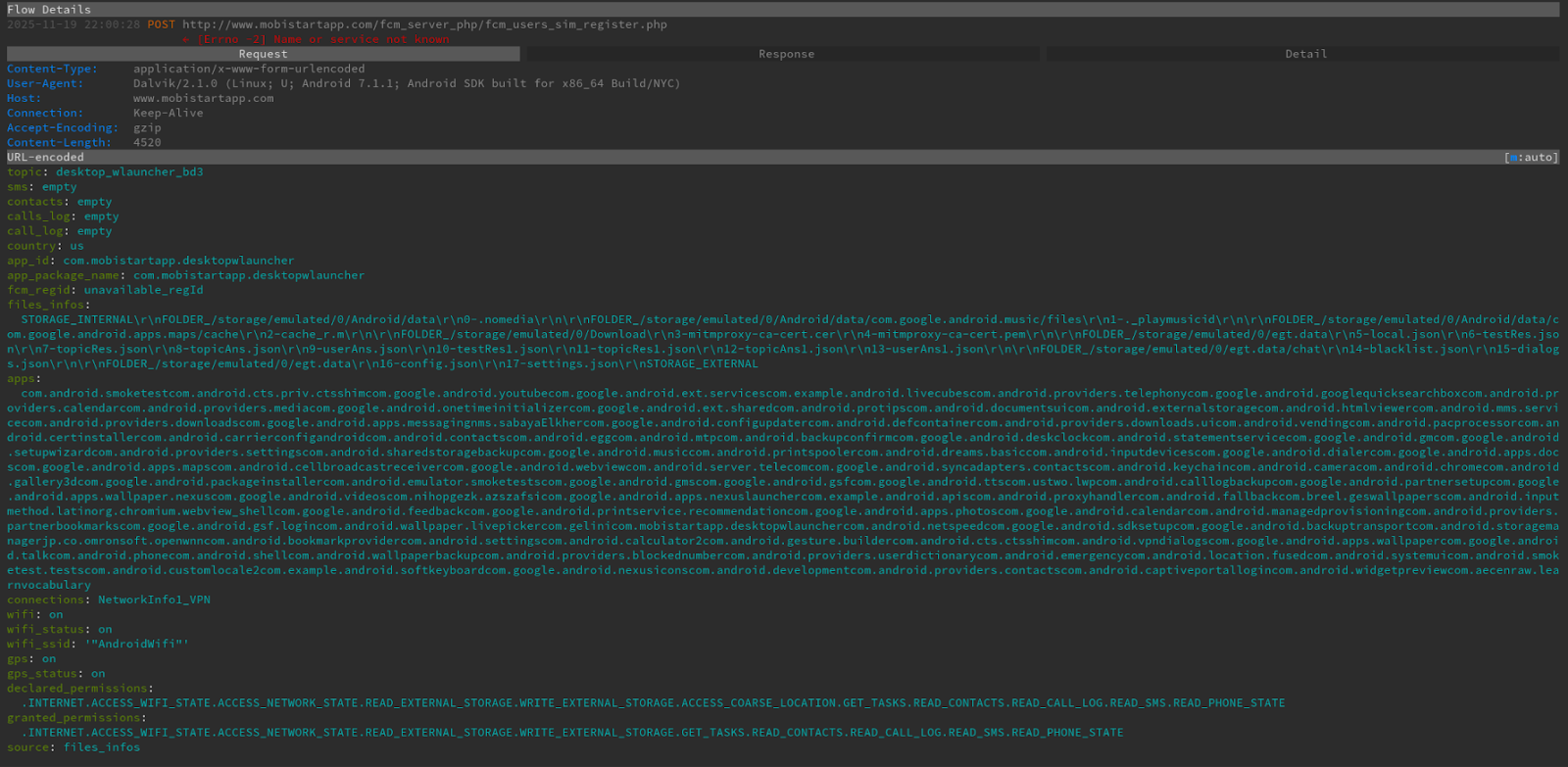

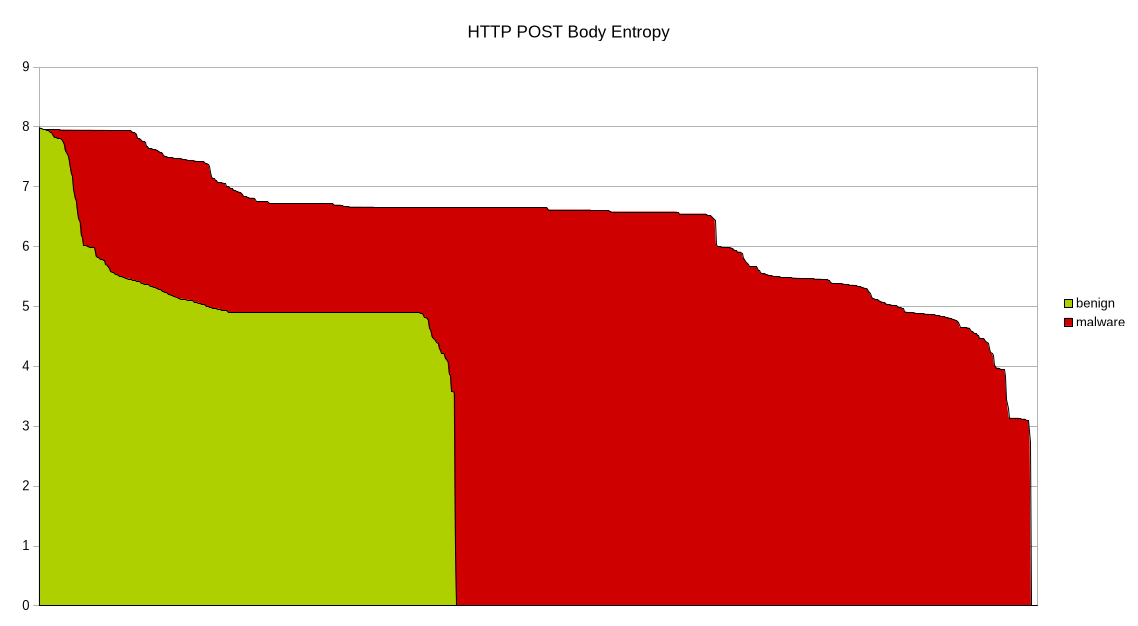

One key dynamic technique used in this project was entropy analysis. Entropy measures the randomness of data, and it is often higher in encrypted or obfuscated network traffic. Malware frequently communicates with remote servers using encrypted payloads to hide stolen data or commands.
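For reference, the Shannon entropy of a payload of $N$ bytes, in which byte value $b$ occurs $n_b$ times, is usually measured in bits per byte:

```latex
H(X) = -\sum_{b=0}^{255} p_b \log_2 p_b, \qquad p_b = \frac{n_b}{N}, \qquad 0 \le H(X) \le \log_2 \min(N, 256) \le 8
```

Byte values that never occur contribute nothing (taking $0 \log_2 0 = 0$). A payload made of a single repeated byte scores 0, while data in which all 256 values are equally frequent scores the maximum of 8; encrypted or compressed data tends toward the top of this range, and readable text or JSON sits noticeably lower. The $\log_2 N$ bound also means very short payloads cannot look random even when they are.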

During dynamic execution, network traffic generated by each app was captured and analyzed. Shannon entropy was calculated for the transmitted data to detect unusually high randomness. Apps that consistently produced high-entropy traffic were flagged as suspicious, since this behavior is commonly associated with encrypted malicious communication rather than normal app traffic.
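A minimal sketch of the entropy calculation and a "consistently high" rule is shown below. It assumes `payloads` is a list of request bodies captured for one app (for example, bodies as seen after mitmproxy's interception); the threshold, minimum length and fraction are hypothetical defaults, not values reported by the project.

```python
import math
from collections import Counter

def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of a byte string in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    n = len(data)
    # sum of p * log2(1/p) over the byte values that actually occur
    return sum((c / n) * math.log2(n / c) for c in Counter(data).values())

def is_high_entropy_app(payloads, threshold=7.0, min_len=256, min_fraction=0.5):
    """Flag an app if most of its sufficiently long payloads look random.

    Short payloads are skipped because their entropy is capped at log2(len).
    All three parameters are illustrative assumptions.
    """
    long_enough = [p for p in payloads if len(p) >= min_len]
    if not long_enough:
        return False
    high = sum(1 for p in long_enough if shannon_entropy(p) >= threshold)
    return high / len(long_enough) >= min_fraction

if __name__ == "__main__":
    print(shannon_entropy(b"aaaa"))             # one repeated byte
    print(shannon_entropy(bytes(range(256))))   # every byte value once
    print(is_high_entropy_app([b'{"user": "alice"}' * 40]))
```

Requiring a fraction of payloads, rather than a single one, reflects the "consistently produced" wording above: one compressed image upload should not be enough to flag an app.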

Entropy alone is not enough to label an app as malicious, but when combined with other dynamic indicators, it becomes a powerful signal. This approach allowed the system to detect malware that static analysis alone might miss.
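One way to use entropy as one signal among several is to summarize each app run as a small numeric feature vector and let the classifier weigh the columns. The sketch below is a hypothetical example: it assumes traffic is available as `(host, body_bytes)` pairs per app, and the feature names are illustrative rather than the project's exact feature set.

```python
import math
from collections import Counter
from statistics import mean

def shannon_entropy(data: bytes) -> float:
    """Shannon entropy of a byte string in bits per byte."""
    if not data:
        return 0.0
    n = len(data)
    return sum((c / n) * math.log2(n / c) for c in Counter(data).values())

def dynamic_features(requests, high=7.0):
    """Summarize one app's captured traffic as numeric features.

    requests: list of (host, body_bytes) pairs for a single app run.
    Entropy becomes one column among several instead of a verdict on its own.
    """
    bodies = [body for _, body in requests if body]  # ignore empty bodies
    entropies = [shannon_entropy(b) for b in bodies]
    return {
        "n_requests": len(requests),
        "n_hosts": len({host for host, _ in requests}),
        "bytes_sent": sum(len(body) for _, body in requests),
        "mean_entropy": mean(entropies) if entropies else 0.0,
        "max_entropy": max(entropies, default=0.0),
        "high_entropy_ratio": (sum(e >= high for e in entropies) / len(entropies)
                               if entropies else 0.0),
    }

if __name__ == "__main__":
    reqs = [("a.example", bytes(range(256))), ("b.example", b"aaaa"), ("a.example", b"")]
    print(dynamic_features(reqs))
```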

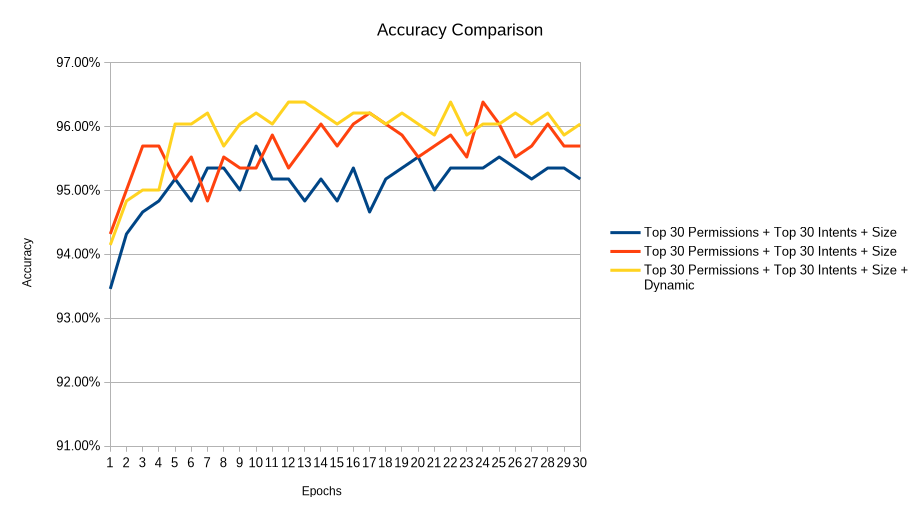

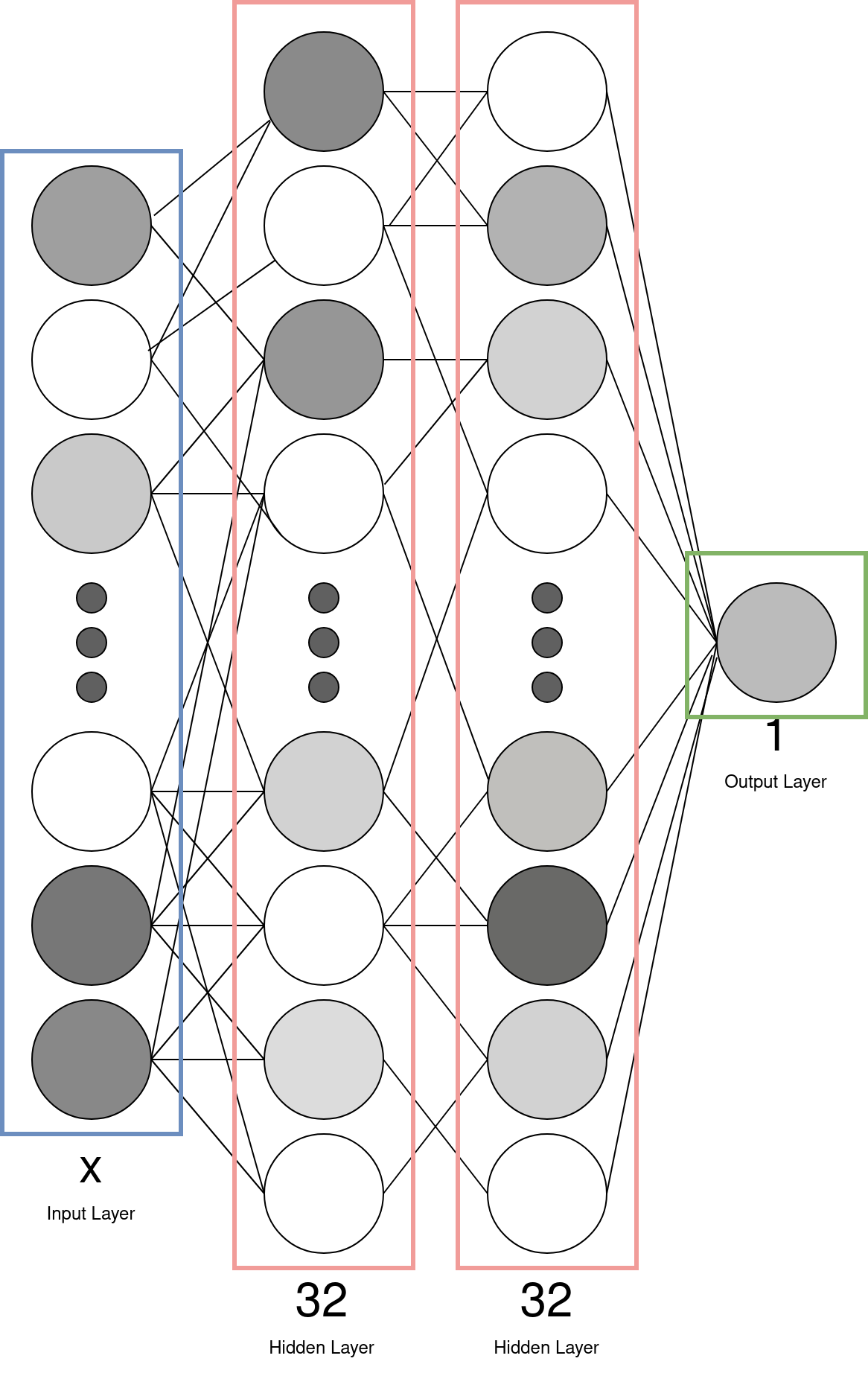

Comparing Static, Dynamic, and Hybrid Results

The project evaluated static-only, dynamic-only, and hybrid feature sets. Static models performed very well and achieved high accuracy with relatively low computational cost. Dynamic analysis provided deeper behavioral insight but required more resources and longer execution times.

The best overall results came from hybrid models that combined static features (such as top permissions and intents) with dynamic features (including entropy-based network behavior and file size). Some reduced hybrid configurations achieved accuracy close to 97% while using far fewer features than the full static dataset.
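A reduced hybrid feature set can be sketched as two steps: pick a handful of static features, then append the dynamic columns. In the sketch below, ranking static features by the gap in how often they appear in malicious versus benign apps is a simple stand-in for feature selection, not necessarily the method used in the project; the function names and the `file_size` key are hypothetical.

```python
def top_k_static(samples, k=10):
    """Rank binary static features by |P(feature | malicious) - P(feature | benign)|.

    samples: list of (label, set_of_static_features), label "malicious" or "benign".
    Ties are broken alphabetically so the result is deterministic.
    """
    mal = [f for label, f in samples if label == "malicious"]
    ben = [f for label, f in samples if label == "benign"]
    vocab = set().union(*(f for _, f in samples))

    def rate(group, feat):
        return sum(feat in f for f in group) / len(group) if group else 0.0

    gap = {feat: abs(rate(mal, feat) - rate(ben, feat)) for feat in vocab}
    return sorted(vocab, key=lambda feat: (-gap[feat], feat))[:k]

def hybrid_vector(static_feats, dynamic_feats, selected, dynamic_keys):
    """Concatenate 0/1 indicators for selected static features with numeric dynamic ones."""
    static_part = [1.0 if feat in static_feats else 0.0 for feat in selected]
    dynamic_part = [float(dynamic_feats.get(key, 0.0)) for key in dynamic_keys]
    return static_part + dynamic_part

if __name__ == "__main__":
    samples = [("malicious", {"SEND_SMS", "INTERNET", "BOOT_COMPLETED"}),
               ("malicious", {"SEND_SMS", "INTERNET"}),
               ("benign", {"INTERNET"}),
               ("benign", {"INTERNET", "CAMERA"})]
    selected = top_k_static(samples, k=2)
    print(selected)
    print(hybrid_vector({"SEND_SMS"}, {"mean_entropy": 7.5, "file_size": 1024},
                        selected, ["mean_entropy", "file_size"]))
```

Note that a feature present in every app (here `INTERNET`) gets a gap of zero and drops out, which is one reason a small selected set can perform close to the full one. In practice the selection should be computed on the training split only, so that the evaluation set does not leak into the choice of features.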